With the climate crisis raging on, I bet you are all thinking about the penguins. How are they going to find food with their ecosystem collapsing? It only makes sense that us humans take our responsibility to feed them. However, it would be preferable not to feed them manually, since Antarctica is a somewhat inhospitable place. And what kind of fish do penguins like to eat anyway? Clearly, the best (and most practical) solution to this problem is to apply machine learning, specifically multi-armed bandits, to build an autonomous self-improving fish-recommender system.

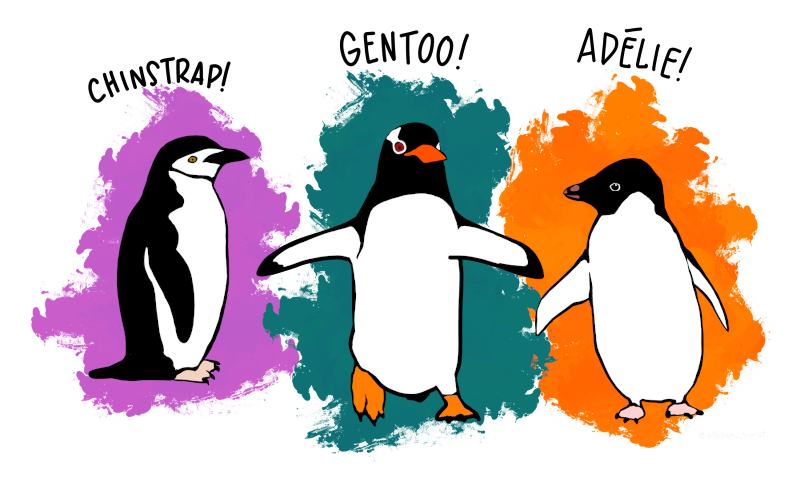

Figure 1: The Palmer penguin species hungry for fish. Artwork by @allison_horst.

The Palmer penguins and their diet

Before we start implementing machine learning algorithms, let us take some time to think about the problem we are trying to solve. The penguins in need of food are the Palmer Penguins, comprising three species: Adelie, Chinstrap and Gentoo with different traits and diets. These penguins are notoriously picky when it comes to food, and won’t eat any kind of fish thrown their way (even when threatened with extinction). Additionally, they refuse to eat fish that has been so much as nibbled on by other penguins (Yes, they are that picky).

Our rescue operation has all the logistics in place to grow and deliver mullets, anchovies and sardines. But which kind of fish is the best one to serve? Since we care about the penguins and their health, we want to serve fish that best matches their dietary preferences. Unfortunately, (assuming your ethological knowledge is as poor as mine) we have no prior knowledge about which penguin likes which fish. And since we don’t speak penguin we have to somehow learn their preferences by trial and error. Hence the machine learning approach to this problem is to build a system that

- Selects a fish to serve approaching penguins.

- Observes if they eat it or not.

- Uses that feedback to learn the penguins preferences.

- Improves future fish selection based on the learned preferences.

A system that learns the preferences of users is commonly referred to as a recommender system, and we are going to build one by treating the problem as a multi-armed bandit. The key concepts in a bandit problem is an agent which learns a decision strategy (or policy) by interacting with an environment. The name itself comes from the idea of a gambler (the agent) who tries to figure out the best slot machine (or “bandit”) to play in a casino (the environment). It is assumed that different bandits have different outcome probabilities, and that the gambler does not know these probabilities beforehand. The only way for her to figure out the best (or perhaps least worst, depending on your take on gambling) bandit is by trial and error. But how to best spend her funds for the highest expected return? This setting embodies the explore/exploit dilemma. If she spends it all on the first promising bandit she encounters, she may never discover a better one further down the hallway. If she instead spreads her funds across too many bandits, she will waste too much on unfavourable bandits and quickly go broke. The key is thus to balance exploration and exploitation in a clever way to maximise the expected return. In our case the fish-feeding system will try to learn the best policy for serving fish by interacting with the penguin population. We will see how to implement the agent later on, but before we do that let us create the environment which the agent will interact with.

The Antarctic environment

The job of the environment will be to simulate the outcomes of feeding fish to penguins. And to do that we need a population of penguins and a model of their dietary preferences. The Palmer penguin data set will do nicely as a population, so let us begin with downloading it and putting it into a DataFrame. We are going to use Julia to implement our recommender system, which is a very nice language for processing data and implementing machine learning algorithms.

using DataFrames, CSV, HTTP

using Distributions, Statistics, ConjugatePriors

using Plots, StatsPlots

download_data(url) = begin

body = HTTP.get(url).body

csv = CSV.read(body, missingstrings = ["NA"])

df = DataFrame!(csv) |> dropmissing

# We rename species column to speciesName and use the

# species column to denote integer class labels.

rename!(df, :species => :speciesName)

df[!,:species] = begin

unique_species = sort(unique(df.speciesName))

ids = Dict(s => i for (i, s) in enumerate(unique_species))

map(s -> ids[s], df.speciesName)

end

df

end

url = "https://raw.githubusercontent.com/allisonhorst" *

"/palmerpenguins/master/inst/extdata/penguins.csv"

palmer = download_data(url);

With the data downloaded, let us have a look at the population distribution.

Figure 2: The penguin population is somewhat skewed. Adelie is the most common species and the Chinstrap the least common one.

Unfortunately, the Palmer data set does not contain any dietary preferences, so for the sake of this article let us say they are given by the table below. It denotes the probability of a penguin of a particular species eating a specific kind of fish when presented with it.

| Adelie | Chinstrap | Gentoo | |

|---|---|---|---|

| Mullet | .50 | .50 | .70 |

| Anchovies | .60 | .95 | .1 |

| Sardines | .15 | .15 | .95 |

Let us now encode this information in Julia. We will do this with a struct PalmerEnv that wraps the data frame and probability table. The data frame is stored as is, while the probability table is stored as a matrix of Bernoulli distributions to make sampling convenient. Additionally we define some convenience functions and make the struct callable. When called with a fish and a penguin it returns either true or false indicating whether the penguin ate the fish or not.

struct PalmerEnv

penguins::DataFrame

eat_probs::Matrix{Bernoulli{Float64}}

end

PalmerEnv(penguins, eat_probs) =

PalmerEnv(DataFrame(penguins),

Bernoulli.(eat_probs))

(env::PalmerEnv)(fish, penguin) =

rand(env.eat_probs[fish, penguin.species])

Base.size(env::PalmerEnv, kwargs...) = size(env.penguins, kwargs...)

Base.getindex(env::PalmerEnv, i) = env.penguins[i,:]

Base.rand(env::PalmerEnv, n::Integer = 1) = env[rand(1:size(env, 1), n)]

We can now instantiate the environment and simulate from it to see which kind of fish is more popular.

eat_probs = [

.50 .50 .70

.60 .75 .1

.15 .15 .80

]

env = PalmerEnv(palmer, eat_probs)

fishes = ["Mullet", "Anchovies", "Sardines"]

simulations = begin

num_sims = 10000

df = rand(env, num_sims)

df[!,:fish] = rand(1:length(fishes), num_sims)

df[!,:eaten] = env.(df[!,:fish], eachrow(df[!,Not(:fish)]))

df

end

fish_eat_probs =

by(simulations, :fish, :eaten => mean) |>

df -> sort(df, :fish)

Figure 3: Simulating from the environment shows that the mullet is the most popular fish, followed by sardines.

Aha! The mullet is the most popular fish with the locals. This makes sense due to the imbalance of the species; the preferences of Adelie and Gentoo are more impactful due to their large population sizes. With the problem set up and the environment implemented, we are now ready to dig into multi-armed bandit problems in more detail and implement the agent.

Bayesian bandits

As briefly touched upon in the introduction, multi-armed bandits are a problem framework in which we want to learn to make optimal decisions. In our case we want to decide which fish to feed to the penguins, but do not know which fish is most likely to be eaten. We have to explore and serve different fishes to know how well received they are, but as soon as we know the penguins preferences we want to exploit them and focus on serving the most popular choices. Solving a bandit problem can hence be formulated as inferring the probability of success given an action, a task beautifully described in a Bayesian framework.

Let \(\theta\) denote the vector of success probabilities for the possible actions. The goal of the agent will be to infer these by playing \(T\) rounds. Before the first round, the agent starts off with a prior belief \(P(\theta)\) over the success probabilities, and in each round it chooses an action \(a_t\), experiences reward \(r_t\) and updates its beliefs through Bayes rule

\[ P(\theta \vert a_t, r_t) = \frac{P(r_t \vert a_t, \theta) P(\theta)}{P(r_t \vert a_t)}. \]

The posterior from round \(t\) is then used as a prior for round \(t+1\), and the process is repeated. This means the agent becomes a bit more certain about the world for each round played. To clean up our notation a bit, we will denote observed action/reward pairs as \(\mathcal{D_t} = (a_t, r_t)\) going forward. Once we have computed \(P(\theta \vert \mathcal{D_{1:T}})\) the optimal action (given our model) is given by

\begin{equation} \begin{split} a^* & = \text{arg max}_a \mathbb{E}[r \vert a, \theta, \mathcal{D_{1:T}}]\\ & = \text{arg max}_a \int P(r \vert a, \theta) P(\theta \vert \mathcal{D_{1:T}}) d\theta. \end{split} \end{equation}

The problem consequently boils down to computing the posterior and its predictive distribution efficiently. A simple model that allows for this is the Beta-Bernoulli model. As the name implies, it models binary outcomes with \(r \vert \theta \sim Bernoulli(\theta)\) with prior \(\theta \sim Beta(\alpha_0, \beta_0)\). This model is appealing due to its simplicity and its conjugacy property which lets us express the posterior in closed form \(\theta \vert r \sim Beta(\alpha_0 + r, \beta_0 + 1 - r)\). But we can only update the posterior if we observe a reward, and that only happens after we choose an action. So how to decide which action to take each round? It is not an easy question to answer, due to the explore/exploit dilemma mentioned earlier. A good strategy is nevertheless critical to achieve good performance. Fortunately, there is an elegant yet powerful strategy we can use called Thompson sampling.

Explore/exploit through Thompson sampling

Thompson sampling is an algorithm for tackling the explore/exploit dilemma which has proven very useful when solving multi-armed bandits problems. It is performed in each round by sampling from the posterior and acting optimally conditioned on the sampled value. That is, for round \(t\) we sample \(\hat{\theta} \sim P(\theta \vert \mathcal{D_{1:t-1}}) \) and select action \(a_t = \text{arg max}_a\) \(\mathbb{E}[r \vert a, \hat{\theta}] \). We then observe reward \(r_t\) and update our posterior, which means samples for the next rounds will come from a better informed posterior.

When starting off, we have not observed any data, so \(P(\theta \vert \mathcal{D}_{1:t-1}) = P(\theta)\). Given that we picked \(P(\theta)\) to be decently uncertain, the posterior samples will be very diverse, leading to the agent exploring. But as the agent gathers more data, the posterior will concentrate over the best outcomes, smoothly transitioning to a more exploiting behaviour. This might feel a bit abstract, but hang in there, there are some helpful visualisations coming up shortly.

Let us look at an implementation of the Beta-Bernoulli model. Similar to the environment, we implement the agent as a struct with some convenience functions. We also make it callable, which is where the magic happens. It receives a function which it calls with an action to receive a reward. It then updates the posterior (Using the ConjugatePriors.jl package. No need to type out all that error prone math!) and returns the chosen action. Note how Thompson sampling simplifies a lot for the Beta distribution, since the expected reward is simply

\(\mathbb{E}[r \vert a, \hat{\theta}] = \hat{\theta}_a\) where we use subscript to denote indexing.

struct BetaBernoulli

pθ::Array{Beta{Float64}}

end

BetaBernoulli(K) =

BetaBernoulli([Beta(1, 1) for _ in 1:K])

Base.length(b::BetaBernoulli) = length(b.pθ)

Base.iterate(b::BetaBernoulli) = b.pθ[1], 1

Base.iterate(b::BetaBernoulli, i) =

i < length(b) ? (b.pθ[i+1], i+1) : nothing

(b::BetaBernoulli)(act) = begin

θ̂ = rand.(b.pθ)

a = argmax(θ̂)

r = act(a)

b.pθ[a] = posterior(b.pθ[a], Bernoulli, [r])

a

end

Running and evaluating the agent

Now all the pieces are in place to run our agent! But one final question remains. How can we assess its performance? Popular metrics such as accuracy and MSE cannot be used as-is, since the observations are not collected beforehand. Instead, we will use the concept of regret minimisation. Regret can intuitively be thought of as how much worse the algorithm performed compared to the best choice in hindsight, which we express as the difference between the expected reward of the optimal action and the taken action. The regret accumulates for each round, and we define the cumulative regret for round \(T\) as

\begin{equation} \begin{split} \rho_T & = \sum_t^T \mathbb{E}[r \vert a^*_t] - \mathbb{E}[r \vert a_t] \\ & = \sum_t^T \text{arg max}_k \\ \theta_k - \theta_{a_t} \\ & = T \times \text{arg max}_k \\ \theta_k - \sum_t^T \theta_{a_t} \end{split} \end{equation}

where \(a^*_t\) denotes the best action and \(a_t\) the taken action in round \(t\). Hence, if we always pick the best action \(a_t= a^*_t\) and \(\rho = 0\). Since we are not omniscient, this is not possible. So instead we will settle with making \(\rho_T\) as small as possible asymptotically.

Let us run our Beta-Bernoulli model and plot its posterior and accumulated regrets to see how it all fits together. The run function below takes the environment env, an agent, the highest expected reward \(\theta_{max} = \text{arg max}_k \\ \theta_k\), and an expected_reward function that returns \(\mathbb{E}[r \vert a]\). In return it produces the per-round regrets.

run(env, agent, θmax, expected_reward; rounds) = begin

map(1:rounds) do _

penguin = first(rand(env))

a = agent(fish -> env(fish, penguin))

θ = expected_reward(a)

ρ = θmax - θ

end

end

expected_reward(fish) = fish_eat_probs.eaten_mean[fish]

agent = BetaBernoulli(length(fishes))

θ_max = expected_reward(argmax(fish_eat_probs.eaten_mean))

regrets = run(env, agent, θ_max, expected_reward; rounds = 1000);

Figure 4: Regret increases quickly at first, but plateaues as more data is collected. The agent successfully learns that mullets have the highest probability of being eaten, but since the success probabilities are very similar for this problem, it is difficult for the model to become truly certain.

The agent successfully infers the success probability of the most popular fish! As we can see, the regret accumulates every time the agent selects a sub-optimal fish to serve, but eventually starts to converge. We also see how the posterior starts off completely uniform but soon enough reflects the “goodness” of the fishes. However, the agent had to run for quite a long time before starting to converge. This is partly because of the success probabilities being very similar, which requires more exploration for good parameter estimates, but also because the model is very simple.

Ending notes

While the algorithm we implemented is quite simple, we talked about quite a few different concepts in this article. Let us take a step back and recap what we covered.

We saw how recommender systems can be framed as solving a multi-armed bandit problem, and how to use Bayesian methods to solve them. We used a Beta-Bernoulli model to model success probabilities, and applied Thompson sampling to tackle the exploration/exploitation dilemma. Finally we saw how to evaluate the model by measuring its cumulative regret.

While the Beta-Bernoulli model manages to infer that the mullet is the most popular fish, it takes an awful long time for it to do so. It would be preferable not to waste 1000 fishes before the penguins actually start eating properly. One reason for the slow convergence is the model’s simplicity: it assumes a single best fish to serve. It has no way of figuring out that the different penguin species prefer different kinds of fish.You may have noticed that the mullet is in fact nobody’s favourite; it is just the most likely fish to be eaten overall! Fortunately, there is an extension to the bandit framework known as contextual multi-armed bandits that allows us to vastly improve upon this by learning individual penguin preferences. We will see how to do this in the second part.

Thank you for reading! If you have any questions or comments, please let me know.